This post introduces the basic concepts and derivations of generative modeling.

Generative Models

Depending on whether annotations are available, machine learning methods can be categorized as supervised or unsupervised learning. Depending on which distribution they model, these methods can also be roughly divided into two categories: generative and discriminative. In supervised learning, the most common approach is discriminative: it models the conditional probability $p_\theta(y|x)$ parameterized by $\theta$. With $\theta$ learnt by backpropagation over the samples, the model directly predicts $p_\theta(y|x)$. A generative model, on the other hand, models the joint probability $p(x, y)$ and predicts $p(y|x) = p(x, y) / p(x)$ from it. Knowing what a generative model cares about, we focus on unsupervised generative models that are used to generate images that look as real as possible.
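To make the distinction concrete, here is a minimal sketch on a toy discrete problem. The joint table `p_xy` is made up for illustration and does not come from any dataset. It shows how a model that knows the joint $p(x, y)$ can recover the conditional $p(y|x)$ that a discriminative model would estimate directly.

```python
import numpy as np

# Hypothetical joint distribution p(x, y): 3 values of x (rows), 2 labels y (columns).
p_xy = np.array([
    [0.10, 0.20],
    [0.30, 0.05],
    [0.15, 0.20],
])

# Marginal p(x), obtained by summing the joint over y.
p_x = p_xy.sum(axis=1, keepdims=True)

# Conditional p(y | x) = p(x, y) / p(x): what a discriminative model estimates directly.
p_y_given_x = p_xy / p_x

# Predicted label for each x: the most probable y under p(y | x).
y_pred = p_y_given_x.argmax(axis=1)

print(p_y_given_x)
print(y_pred)
```

The joint table also gives access to $p(x)$ itself, which is the quantity the rest of this post is concerned with.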

Modeling over p(x)

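Given a distribution $p_\theta(x)$ parameterized by $\theta$, we denote the true distribution as $p_{\theta^*}(x)$. To generate samples $x$ that look as if they were drawn from $p_{\theta^*}(x)$, we want to find $\theta^*$, the value of $\theta$ that maximizes $p_\theta(x)$ for $x\sim p_{\theta^*}(x)$.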

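Empirically, this amounts to maximizing the likelihood, that is, the joint probability of all $m$ training samples $x^{(1)}, \dots, x^{(m)}$, assumed to be drawn independently:

$$\theta^* = \arg\max_\theta \prod_{i=1}^{m} p_\theta(x^{(i)})$$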

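This can be rewritten as maximization of the log likelihood, approximated with the $m$ samples $x^{(i)}$ drawn from $p_{\theta^*}(x)$:

$$\theta^* = \arg\max_\theta \sum_{i=1}^{m} \log p_\theta(x^{(i)})$$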

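This can also be seen as maximizing the expectation of the log likelihood with respect to $p_{\theta^*}(x)$, which can be rewritten as minimizing the cross entropy between the two distributions. Since the entropy of $p_{\theta^*}(x)$ is a constant that does not depend on $\theta$, this is equivalent to minimizing the KL divergence between the two distributions:

$$
\begin{aligned}
\theta^* &= \arg\max_\theta \mathbb{E}_{x\sim p_{\theta^*}(x)}\left[\log p_\theta(x)\right] \\
&= \arg\min_\theta \, -\int p_{\theta^*}(x) \log p_\theta(x)\, dx \\
&= \arg\min_\theta \int p_{\theta^*}(x) \log \frac{p_{\theta^*}(x)}{p_\theta(x)}\, dx \\
&= \arg\min_\theta D_{KL}\left(p_{\theta^*}(x)\,\|\,p_\theta(x)\right)
\end{aligned}
$$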
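The relation between expected log likelihood, cross entropy and KL divergence can be checked numerically. The sketch below uses two small discrete distributions chosen arbitrarily for illustration: `p_true` stands in for $p_{\theta^*}(x)$ and `q_model` for $p_\theta(x)$. It verifies that cross entropy equals entropy plus KL divergence, and that the average log likelihood over samples approximates the negative cross entropy.

```python
import numpy as np

rng = np.random.default_rng(0)

# Arbitrary illustrative distributions over three outcomes.
p_true = np.array([0.5, 0.3, 0.2])   # stands in for p_{theta*}(x)
q_model = np.array([0.4, 0.4, 0.2])  # stands in for p_theta(x)

# Entropy of p, cross entropy between p and q, and KL(p || q).
entropy = -np.sum(p_true * np.log(p_true))
cross_entropy = -np.sum(p_true * np.log(q_model))
kl = np.sum(p_true * np.log(p_true / q_model))

# Monte Carlo estimate of E_{x ~ p}[log q(x)] from samples drawn from p.
samples = rng.choice(len(p_true), size=100_000, p=p_true)
avg_log_lik = np.mean(np.log(q_model[samples]))

print("cross entropy:", cross_entropy)
print("entropy + KL :", entropy + kl)
print("-avg log lik :", -avg_log_lik)
```

Because `entropy` does not depend on `q_model`, any change to `q_model` that lowers the cross entropy lowers the KL divergence by the same amount.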

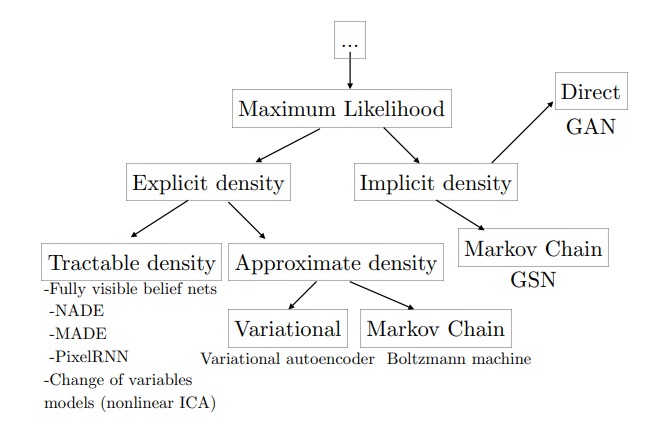

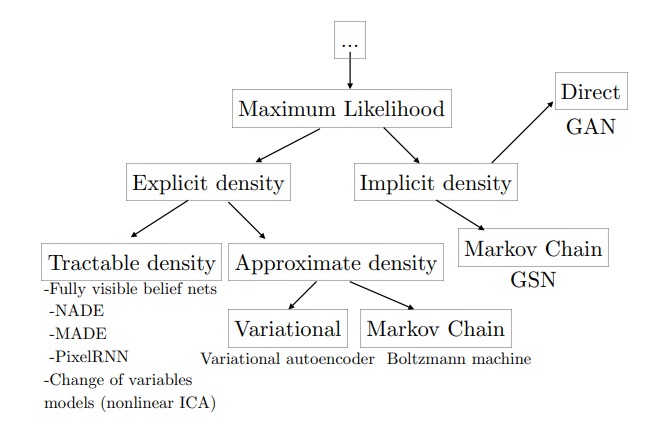

A taxonomy of deep generative models

We refer to the NIPS 2016 tutorial on GANs by Ian Goodfellow for a taxonomy of generative models.

We now have our goal: maximizing the log likelihood $\log p_\theta(x)$. One of the biggest problems is how to define $p_\theta(x)$. Explicit density models define an explicit density function $p_\theta(x)$, which can be directly optimized through backpropagation. The main difficulty in explicit density models is designing a model that can capture all of the complexity of the data to be generated while still remaining computationally tractable.
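As a minimal sketch of what "directly optimized" means, the example below fits the simplest explicit density, a one-dimensional Gaussian, by gradient ascent on the average log likelihood. The toy data, the parameterization `(mu, log_sigma)` and the learning rate are all assumptions made for illustration, and the gradients are derived by hand rather than obtained through backpropagation. A Gaussian is tractable but far too simple for images, which is exactly the trade-off described above.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy training set; the "true" distribution N(2, 0.5^2) is an illustrative assumption.
data = rng.normal(loc=2.0, scale=0.5, size=5_000)

# Parameters theta = (mu, log_sigma) of the explicit density p_theta(x) = N(x; mu, sigma^2).
mu, log_sigma = 0.0, 0.0
learning_rate = 0.1

def avg_log_likelihood(x, mu, log_sigma):
    """Average of log p_theta(x) over the samples x."""
    z = (x - mu) / np.exp(log_sigma)
    return np.mean(-0.5 * np.log(2.0 * np.pi) - log_sigma - 0.5 * z**2)

initial_ll = avg_log_likelihood(data, mu, log_sigma)

for _ in range(2_000):
    sigma = np.exp(log_sigma)
    z = (data - mu) / sigma
    # Hand-derived gradients of the average log likelihood.
    grad_mu = np.mean(z / sigma)
    grad_log_sigma = np.mean(z**2 - 1.0)
    # Gradient ascent step: we maximize the log likelihood.
    mu += learning_rate * grad_mu
    log_sigma += learning_rate * grad_log_sigma

final_ll = avg_log_likelihood(data, mu, log_sigma)

print("fitted mu, sigma:", mu, np.exp(log_sigma))
print("sample mean, std:", data.mean(), data.std())
print("avg log likelihood:", initial_ll, "->", final_ll)
```

The fitted parameters should approach the sample mean and standard deviation, which are the closed-form maximum likelihood estimates for a Gaussian.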